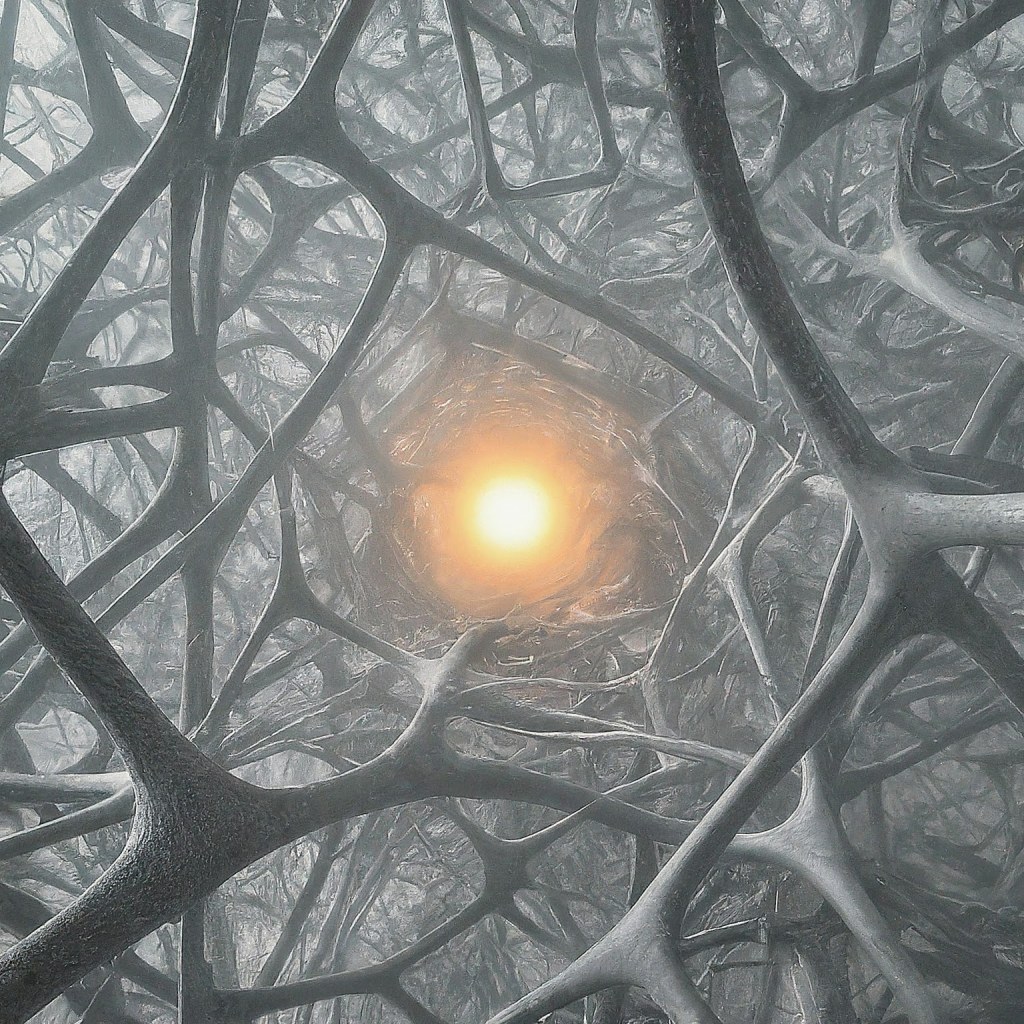

How will AI evolve from quick, bite-sized answers to profound answers buried in the depths of contemplative cognition?

It needs to develop type 2 thinking: the deliberate, effortful, and conscious mode of reasoning that humans use when faced with complex problems, abstract concepts, and hypothetical scenarios.

Current LLMs have already delivered real progress: they are exceptionally good at pattern recognition and at generating statistically likely responses. Type 2 thinking would go further, unlocking a host of new use cases and helping with problem solving across domains:

- Climate change strategies: Analyze complex climate data, understand environmental interconnections, and propose solutions considering economic, social, and environmental factors.

- Personalized healthcare: Assess genetic and environmental influences and medication interactions to create individualized treatment plans, enhancing patient care and efficiency.

- Conflict resolution: Navigate complex geopolitical and cultural landscapes. By understanding different stakeholder perspectives and historical contexts, it could suggest innovative, peaceful solutions.

- Deep space exploration and settlement: Navigate spacecraft, manage life support systems, and conduct scientific experiments, adjusting to new discoveries and emergencies without waiting for input from Earth, since communication delays make real-time human oversight impractical.

The main reasons these AI systems haven't developed this type of thinking yet are:

- Lack of deep reasoning: AI models often struggle to establish true causal relationships and understand the implications of hypothetical scenarios, which makes profound reasoning difficult.

- Embodiment and grounding: Many experts theorize that true type 2 thinking is tied to our embodied experience of the world. AI models lack physical bodies and real-world sensory input, limiting their ability to form the rich conceptual understanding that we humans base our reasoning on.

- Data bias: AI models are trained on massive datasets, which inherently reflect existing human biases and thought shortcuts. This can hinder their ability to think critically and break free from those same limitations.

To get beyond Wikipedia-like regurgitation and concise summaries into the realms of logical analysis, abstract thinking, and hypothetical reasoning, a few key developments are needed:

- Enhance language comprehension: Improve understanding of language, concepts, and their relationships.

- Boost logical reasoning: Gain abilities in logical, deductive, inductive, causal, and counterfactual reasoning.

- Strengthen cognitive control: Implement mechanisms to manage thought processes, switch thinking modes, and address errors or biases.

- Improve working memory: Enhance the capacity to hold and process information temporarily.

- Sharpen focus: Hone the ability to concentrate on relevant data while ignoring distractions.

- Merge knowledge sources: Integrate varied information into coherent, meaningful wholes.

- Expand metacognition: Develop the ability to reflect on and refine its own thinking processes.

In the quest to imbue AI with type 2 thinking, we need to come very close to modeling the essence of what makes the human mind so remarkable. Of course, as the technology advances, more ethical questions will arise. What happens when we create thinking machines that can reason about ethics itself? Or machines that can understand the nuances of conflicting value systems? To develop this safely, it is essential to build techniques for explainable and interpretable AI, allowing humans to understand the reasoning processes behind these models' decisions. If and when¹ we clear these hurdles, the payoff is a new form of human-machine symbiosis.

This piece was influenced by Andrej Karpathy's talk "[1hr Talk] Intro to Large Language Models".

¹ Likely *when*, as I imagine this article will become dated pretty quickly.